Research at SML

Social Media TestDrive: Social Media for Education

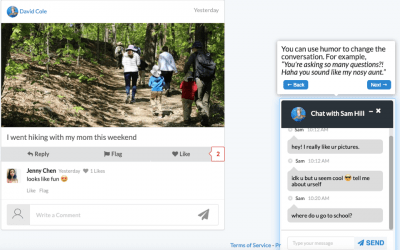

Social Media TestDrive is an interactive educational program developed by SML, in partnership with Common Sense Education, that offers modules on key digital citizenship topics, such as managing privacy settings, smart self-presentation, being an upstander to cyberbullying, and news literacy. Each Social Media TestDrive module teaches a set of digital citizenship concepts and allows youth to practice and reflect upon what they have learned using a simulated social media experience within a safe and protected platform.

Chatbots for cyberbullying bystander education

This study focuses on developing a chatbot system designed to support learners in becoming effective upstanders in cyberbullying situations. We explore the barriers bystanders often face in the intervention process, and build upon these findings to provide effective persuasion strategies and practical advice that train bystanders to stand up against online harassment. By leveraging the flexibility and utility of large language models, we aim to create an educational tool that enhances users' confidence and ability to address cyberbullying, contributing to a safer and more supportive online environment.

Tech, health, and policy

As technology increasingly permeates our daily lives, its impact on health and healthcare also increases. In this project, we explore the complexities of various emerging digital threats, as well as how healthcare tools and policies can respond to them. We also explore how novel technological tools and practices, such as telehealth and various digital healthcare systems, are introduced to address healthcare needs, and how they are used in practice. We are currently conducting a study on youth healthcare practitioners' knowledge and perspectives regarding digital risk factors and digital abuse, with a particular focus on screening processes. We are interviewing youth practitioners about their experiences addressing digital abuse and related risks, the extent to which they have received practical training to prepare for such cases, and the barriers they face in providing care for affected youth.

Self-Control in Manipulative Algorithmic Environments

Many features of social media platforms and websites, such as infinite scroll and autoplay, are intentionally designed to bypass users' reflective decision-making system to deliver effortless, short-term gratification. While these features feel gratifying in the moment, they are often counterproductive because they undermine meaningful reward and well-being over the long term. Efforts to promote digital self-control have typically focused on technological fixes to override impulsive tendencies. This study takes a different approach. Leveraging insights from cognitive science, it presents a constructive dual-pathway approach to promote digital self-control. Moving beyond deficit-based models that frame self-control failure as a sign of resource depletion, it embraces a process model based on attentional shifting, to bring the self-control dilemma into focus, and motivational anchoring, to align the valuation of choices with long-term rewards. Through a two-wave longitudinal experiment, we test the effects of two cognitive interventions to support emergent adults' digital self-control.

Social Norm Project

This study investigates the question: When two competing behaviors coexist, which is perceived as normative? We focus on harassment and objection to harassment in online communities, exploring how their relative frequencies shape perceptions of social norms. Specifically, we examine how exposure to these behaviors influences users' perceptions of what is acceptable and how these norms affect individuals' intentions to act and their likelihood of objecting to harassment. We conducted experimental studies using the Truman Platform, a simulated social media site, to identify the mechanisms behind social norm formation and how others' behaviors shape newcomers' understanding and actions. We are currently expanding to field studies, with the goal of highlighting the importance of proactive responses and developing an intervention that promotes user-driven upstanding.

Narratives in Counterspeech: A Field Experiment on Social Media

This project investigates the feasibility and effectiveness of narrative-based counterspeech in mitigating objectionable content in real-world online settings, specifically targeting vaccine misinformation and anti-immigrant rhetoric on social media platforms such as Reddit. The study also examines whether exposure to such narratives motivates prosocial interventions from bystanders, empowering them to effectively counteract problematic online content.

AI-Counter Speaker

This study investigates how online communities respond to AI agents that engage in counterspeech against problematic content. Counterspeech, or responses that challenge toxic or hateful messages, has shown promise in promoting prosocial norms, but delivering it effectively remains difficult. Recently, generative AI and large language models have been explored as potential tools for producing counterspeech at scale. However, the use of AI in this role introduces new questions. Can AI agents be seen as legitimate and authentic voices in community discourse? Will people accept moral or empathetic messages from nonhuman sources? And should AI agents present themselves as fellow community members or simply as technical tools? This study aims to address these questions by examining the effectiveness and reception of AI-generated counterspeech in online communities.