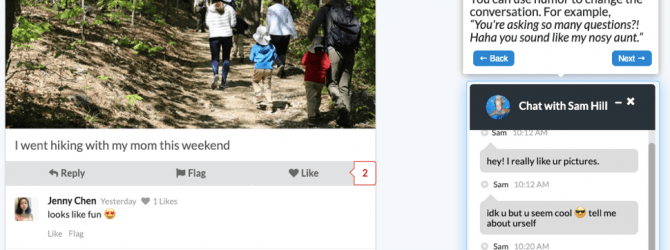

A brief screen recording of Social Media TestDrive.

Social Media TestDrive

Social Media TestDrive is an interactive educational program developed by the SML in partnership with Common Sense Education. It offers modules on key digital citizenship topics, such as managing privacy settings, smart self-presentation, upstanding to cyberbullying, and news literacy. Each module teaches a set of digital citizenship concepts and lets youth practice and reflect on what they have learned through a simulated social media experience on a safe, protected platform.

In August 2019, Social Media TestDrive launched nationwide alongside Common Sense Education’s new Digital Citizenship curriculum. Nine Social Media TestDrive modules are currently linked as extension activities to the corresponding Common Sense Education Digital Citizenship lessons and are freely available to the public. As of April 2020, more than 44,000 people had used the tool. You can read more about our most recent updates to the Social Media TestDrive curriculum here.

In Spring 2020, the team was awarded a $300,000 EAGER (Early-concept Grants for Exploratory Research) award from the NSF Secure and Trustworthy Cyberspace program for the project Addressing Social Media-Related Cybersecurity and Privacy Risks with Experiential Learning Interventions. This grant will support research to identify and create targeted interventions for subgroups of middle school youth who face higher privacy risks online. A second goal of this research is to extend the reach of Social Media TestDrive to a population for which it was not originally designed: older adults. To do this, we will use an inclusive privacy-based approach to identify needs and concerns specific to older adults, and then develop and evaluate an intervention suited to those needs.

Starting in Fall 2020, the team will conduct a multisite outcome evaluation of the Social Media TestDrive platform using surveys, focus groups, and one-on-one interviews. This evaluation is a critical next step for understanding how Social Media TestDrive affects digital literacy knowledge, attitudes, skills, and behaviors, and for improving its effectiveness. The research will be conducted in collaboration with our community partners, New York State 4-H and Cornell PRYDE.

For more information and access to the Social Media TestDrive modules, please visit https://socialmediatestdrive.org/.

Related Publications

DiFranzo, D., Choi, Y.H., Purington, A., Taft, J.G., Whitlock, J., Bazarova, N.N. (2019). Social Media TestDrive: Real-World Social Media Education for the Next Generation. In Proceedings of the 2019 ACM Conference on Human Factors in Computing Systems (CHI’19). Glasgow, UK.

Making Social Media a Positive Experience for Young Users (March 26, 2020), Psychology Today.

Test-Drive Social Media for Digital Citizenship (August 19, 2019), Psychology Today.